Global vs. Local Data Flow Analysis: Crucial in ABAP Code Security

Most organizations running SAP applications implement extensive customizations to map their business processes onto SAP technology. These customizations can add up to millions of lines of ABAP code, which is written by humans and may contain security vulnerabilities, among other types of issues.

To ensure the security of the code that organizations create, a solution that integrates into the development lifecycle is key: vulnerabilities are identified early during development, when their mitigation is much less expensive than after an assessment in production.

When trying to detect those vulnerabilities, the critical question is always whether certain types of data can be manipulated through user input, and whether this user input is not, or not sufficiently, validated before the code uses it. Some typical vulnerabilities that may arise from this are:

- SQL Injection

- Code Injection

- Directory Traversal

- OS Command Injection

To identify these types of vulnerabilities (as well as others), the security product doing the assessment must perform a data flow analysis to determine whether user input is used as input for dynamic code without prior validation.

The most popular ABAP code security tool, Onapsis’ Control for Code ABAP (C4CA), can be triggered by developers on demand in the ABAP Workbench (SE80) or in the ABAP Development Tools (ADT). C4CA also provides real-time support for developers in ADT. Most customers also trigger automatic checks during the release process of an object to ensure that every object is checked at least once and that no (or no unauthorized) security vulnerability can reach production.

Other ABAP code security tools on the market also perform data flow analysis, treating every externally facing interface as potential user input. However, these tools differ in the scope of their data flow analysis and in how precisely they identify real user input. This difference has a huge impact on the rate of false negatives and false positives.

Local Data Flow Analysis

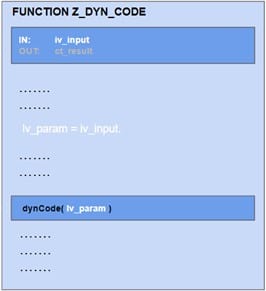

To detect vulnerabilities like SQL, Code, or OS Command Injection and Directory Traversal, it is crucial to analyze the data flow between any externally exposed interface and the dynamic part of the code. The first example shows a scenario in which the input parameter iv_param of a function module is not passed directly to the dynamic code. A data flow analysis detects that the value of iv_param is assigned to lv_param and that lv_param is then used as input in the dynamic code.

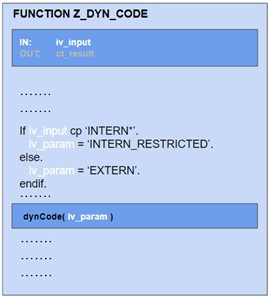

A data flow analysis is also required to prevent false positives. The following example should not generate a finding, since the analysis recognizes that the value of the input parameter iv_param is checked before it is applied to the dynamic statement:

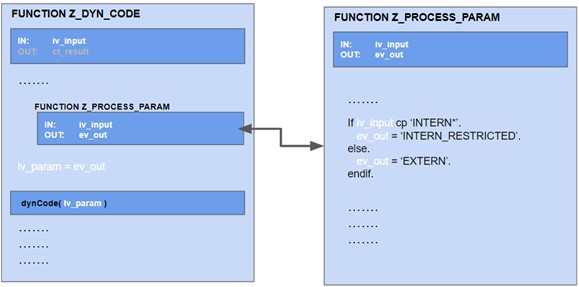
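The two cases above can be sketched in a few lines of Python. This is only an illustration of the principle, not how any particular product works: the statement tuples, the function name scan_module, and the "validate" operation are assumptions made for this sketch, and the code only handles straight-line code without branches or loops.

```python
def scan_module(params, statements):
    """Return the variables that reach dynamic code while still unvalidated.

    Hypothetical model: each statement is a tuple, processed top to bottom.
    """
    tainted = set(params)  # every interface parameter counts as user input
    findings = []
    for stmt in statements:
        op = stmt[0]
        if op == "assign":        # ("assign", target, source)
            _, target, source = stmt
            if source in tainted:
                tainted.add(target)       # taint follows the assignment
            else:
                tainted.discard(target)   # target now holds a safe value
        elif op == "validate":    # ("validate", var): var has been checked
            tainted.discard(stmt[1])
        elif op == "sink":        # ("sink", var): var is used in dynamic code
            if stmt[1] in tainted:
                findings.append(stmt[1])
    return findings


# iv_param is copied to lv_param, which then feeds the dynamic code.
unchecked = [("assign", "lv_param", "iv_param"), ("sink", "lv_param")]

# Same flow, but iv_param is checked first.
checked = [("validate", "iv_param"),
           ("assign", "lv_param", "iv_param"),
           ("sink", "lv_param")]

print(scan_module(["iv_param"], unchecked))  # ['lv_param']
print(scan_module(["iv_param"], checked))    # []
```

Without tracking the assignment, the first case would be missed; without tracking the validation, the second case would be reported as a false positive.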

In real life, the data flow can be quite complex. The above check could be included in another function module:

Some tools on the market perform only a local data flow analysis. A local data flow analysis can only detect the mitigation if the called function module Z_PROCESS_PARAM belongs to the same compilation unit (here: function group) as the calling function module Z_DYN_CODE. In other words:

For a local data flow analysis, all directly or indirectly called modules that do not belong to the same compilation unit as the module of interest are blind spots!

In the best case, the restrictions of a local data flow analysis lead to false positives. But there is also a risk of getting false negatives.

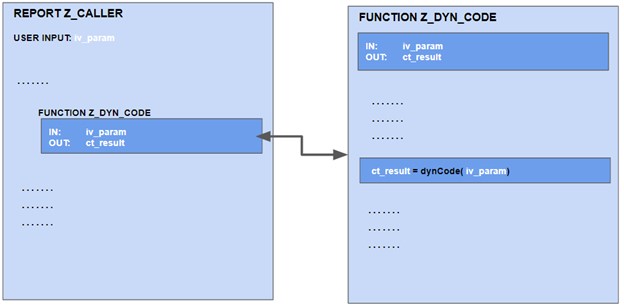

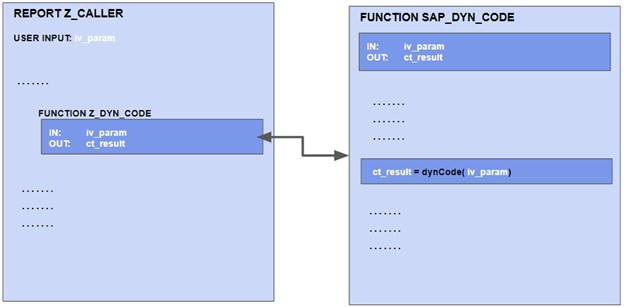

A false negative can occur if the dynamic code is in a called module that is not part of the scan scope. In the following example, the program Z_CALLER is checked for vulnerabilities. The program itself does not contain any dynamic code, but the called function module Z_DYN_CODE does, and, even worse, its input parameter is supplied with user input by the calling program Z_CALLER.

Since the function module does not belong to the same compilation unit, its content is not analyzed in a local data flow analysis and the critical situation is not detected!
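Both effects of the blind spot, the false positive and the false negative, can be reproduced with a small Python model. Everything in it is an assumption made for illustration: the Module class, the statement tuples, the compilation unit names ZFG_CHECKS and ZFG_DYN, and the variant name Z_DYN_CODE_CHECKED (standing for the version of Z_DYN_CODE that delegates its check to Z_PROCESS_PARAM). The sketch also assumes that a local analysis treats the result of an unanalyzed call as unvalidated, and it does not handle recursive calls.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Module:
    name: str
    unit: str              # compilation unit (function group, program, ...)
    param: Optional[str]   # single input parameter, or None
    body: list             # straight-line statements as tuples


def scan(modules, entry, global_scope, param_is_input=True):
    """Return {(module, variable)} where unvalidated data reaches dynamic code."""
    findings = set()

    def run(mod, param_tainted):
        tainted = {mod.param} if (param_tainted and mod.param) else set()
        returns_tainted = False
        for stmt in mod.body:
            op = stmt[0]
            if op == "input":          # ("input", var): var holds user input
                tainted.add(stmt[1])
            elif op == "validate":     # ("validate", var)
                tainted.discard(stmt[1])
            elif op == "sink":         # ("sink", var): dynamic code uses var
                if stmt[1] in tainted:
                    findings.add((mod.name, stmt[1]))
            elif op == "return":       # ("return", var)
                returns_tainted = stmt[1] in tainted
            elif op == "call":         # ("call", callee, arg, result)
                _, callee_name, arg, result = stmt
                callee = modules[callee_name]
                if global_scope or callee.unit == mod.unit:
                    result_tainted = run(callee, arg in tainted)
                else:
                    # Blind spot: the callee body is not analyzed. Its sinks
                    # stay invisible, and its result is assumed to pass the
                    # argument through unchanged.
                    result_tainted = arg in tainted
                if result is not None:
                    if result_tainted:
                        tainted.add(result)
                    else:
                        tainted.discard(result)
        return returns_tainted

    run(modules[entry], param_is_input)
    return findings


modules = {m.name: m for m in [
    Module("Z_PROCESS_PARAM", "ZFG_CHECKS", "iv_param",
           [("validate", "iv_param"), ("return", "iv_param")]),
    Module("Z_DYN_CODE_CHECKED", "ZFG_DYN", "iv_param",
           [("call", "Z_PROCESS_PARAM", "iv_param", "lv_param"),
            ("sink", "lv_param")]),
    Module("Z_DYN_CODE", "ZFG_DYN", "iv_param",
           [("sink", "iv_param")]),
    Module("Z_CALLER", "Z_CALLER", None,
           [("input", "p_input"), ("call", "Z_DYN_CODE", "p_input", None)]),
]}

# False positive: the validation in Z_PROCESS_PARAM is invisible locally.
print(scan(modules, "Z_DYN_CODE_CHECKED", global_scope=False))
# {('Z_DYN_CODE_CHECKED', 'lv_param')}
print(scan(modules, "Z_DYN_CODE_CHECKED", global_scope=True))
# set()

# False negative: the dynamic code in Z_DYN_CODE is invisible locally.
print(scan(modules, "Z_CALLER", global_scope=False, param_is_input=False))
# set()
print(scan(modules, "Z_CALLER", global_scope=True, param_is_input=False))
# {('Z_DYN_CODE', 'iv_param')}
```

The only difference between the two modes is whether a call into another compilation unit is followed, which is exactly what decides between a correct result and a wrong one in both scenarios.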

One might expect that the problem in the function module Z_DYN_CODE was already detected in the past, since a called function module must have been checked separately before (e.g. because it was transported into the QA and/or production system earlier). But here we face a general problem of local data flow analysis.

If I integrate modules from other developers, departments or companies, I have to rely on someone else’s decision on whether a detected finding is considered critical or not.

Such a decision is enforced in the release process, since transports are usually blocked if error findings are neither mitigated nor accepted by an authorized person. A common way to reach this decision is a manual global data flow analysis. If a potential injection vulnerability is reported for a module, all consumers of that module are checked manually for possible exploits. If the consumers only provide “safe” values to the critical input parameters, the module itself is considered “secure” and the reported vulnerability is accepted:

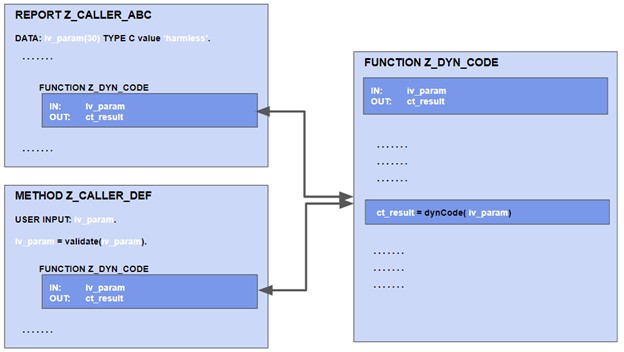

In the above example, the finding for the potential Injection vulnerability in the function module Z_DYN_CODE is accepted because the consumer Z_CALLER_ABC provides exactly one fixed value and the consumer Z_CALLER_DEF validates the user input before it is passed to Z_DYN_CODE. This approach completely ignores that there might be new consumers in the future, like the program Z_CALLER, that provide insecure or unvalidated input values to Z_DYN_CODE (either unintentionally or intentionally).

In a perfect world, developers should obviously only call external modules that are released for public use (APIs, SAP BAPIs, etc.). Security considerations for such modules usually take into account that there could be an unpredictable number of (uncontrollable) consumers and therefore the (B)API module itself must ensure security.

Non-released external modules may be changed incompatibly or deleted without warning. As shown above, there is also a security risk related to these modules, since security decisions are often made based on their current consumers.

A prominent example of this is SAP itself. The Onapsis Research Labs sometimes reports vulnerabilities in non-RFC-enabled function modules or in class methods. Most of these reported vulnerabilities are ultimately rejected by SAP and not patched. The reason for rejection is always the same:

“We have checked all our SAP internal consumers and they only provide secure and/or validated values to the reported module. Since the module is not released for customers and since it can not be called from external, any reference to it in custom code is at the customer’s risk and the consumer is responsible to implement appropriate measures to ensure security”.

In contrast to RFC-enabled function modules, SAP does not take the risk of an intentional exploit into account here. Therefore, it is an easy task for an inside attacker to inject a vulnerable report into production without being noticed by tools that perform checks using local data flow analysis. They just have to identify and call one of these vulnerable SAP function modules that contain dynamic code.

Global Data Flow Analysis

Using patented technology, Onapsis’ C4CA performs a global data flow analysis. A global data flow analysis takes all called modules into account, regardless of whether they belong to the same compilation unit as the consumer. This reduces the number of false positives and false negatives significantly. Another important aspect of a global data flow analysis is that it allows much more granular finding management.

An Injection finding in C4CA is uniquely identified by the source of the data that is provided to the dynamic code and the data sink – that is the source code line of the dynamic code. There can be multiple data sources connected to the same data sink. Tools performing a local data flow analysis interpret exactly one location as the data source, usually an input value in the interface of the checked module.

Example:

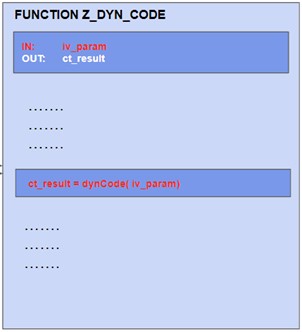

Whenever the vulnerable module Z_DYN_CODE is scanned as part of its compilation unit, its vulnerable character is detected and uniquely identified by the red source code lines. This is independent of any existing consumers.

In contrast to other tools, C4CA reflects the fact that it is only a potential Injection vulnerability in the rating of the finding.

If there is a vulnerability scan of a new consumer in the future, a global data flow analysis will scan the called module Z_DYN_CODE again, independent of whether it belongs to the same compilation unit or not and independent of whether someone has accepted the previous finding on the Z_DYN_CODE scan or not.

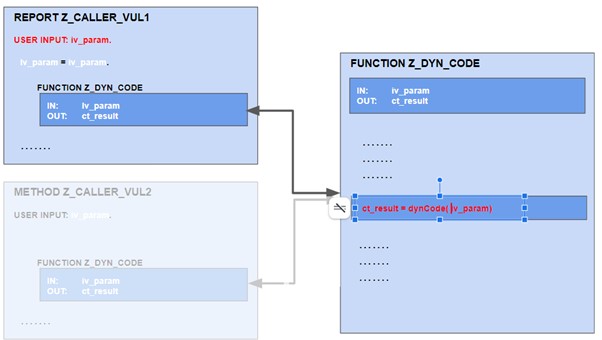

Two examples leading to new individual findings:

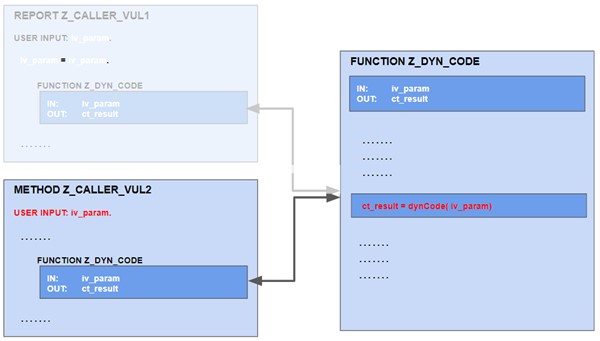

When scanning the program Z_CALLER_VUL1, C4CA recognizes a definite Injection vulnerability since the dynamic code in Z_DYN_CODE is definitely based on user input in Z_CALLER_VUL1. C4CA therefore generates a new finding and rates it with Flaw. The author of the program can now either notify the owner of the function module Z_DYN_CODE and ask for mitigation or they can implement their own mitigation in the program before calling Z_DYN_CODE. The same applies to method Z_CALLER_VUL2.
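The finding management described in this section can be sketched as follows. This is a simplified model and not C4CA's actual data structure: the Finding class, the open_findings helper, the textual source and sink labels, and the rating label "potential" are assumptions for illustration (only the rating Flaw is named above). The point is that a finding's identity is the pair of data source and data sink, so accepting one pair does not hide another pair that ends in the same sink.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    source: str   # where the data enters the flow
    sink: str     # source code line of the dynamic statement
    rating: str   # severity label, not part of the identity

    @property
    def key(self):
        # A finding is identified by its data source and its data sink.
        return (self.source, self.sink)


def open_findings(findings, accepted_keys):
    """Return all findings whose (source, sink) pair has not been accepted."""
    return [f for f in findings if f.key not in accepted_keys]


SINK = "Z_DYN_CODE: dynamic statement"

# Scan of the module itself: the interface parameter is the data source.
scan_of_module = [Finding("Z_DYN_CODE: iv_param", SINK, "potential")]

# An authorized person accepts this potential vulnerability.
accepted = {scan_of_module[0].key}

# Later, a new consumer passes user input to the same sink.
all_findings = scan_of_module + [
    Finding("Z_CALLER_VUL1: user input", SINK, "Flaw"),
]

for f in open_findings(all_findings, accepted):
    print(f.source, "->", f.sink, f"[{f.rating}]")
# Z_CALLER_VUL1: user input -> Z_DYN_CODE: dynamic statement [Flaw]
```

A tool that identified findings by the sink alone, or always by the interface parameter of the checked module, would treat the new data flow as already accepted.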

Summary

Code security tools have to perform a data flow analysis to identify vulnerabilities like SQL Injection, OS Command Injection, Code Injection, and Directory Traversal.

The market-leading solution, Onapsis C4CA, and other tools on the market follow different approaches to this data flow analysis and the resulting finding management. While some tools only perform a local data flow analysis, C4CA optionally executes a global data flow analysis.

A local data flow analysis is an acceptable approach if potential vulnerabilities are always mitigated immediately. The decision to accept a potential vulnerability must never be made by just checking the current consumers. Future consumers might call the vulnerable module in an insecure way, either because of a lack of know-how or with malicious intent.

Onapsis C4CA’s global data flow analysis ensures that any new data flow path that is introduced, e.g. by a new or changed consumer, is analyzed again completely so that the owner of the consumer is informed about critical situations and can initiate their own mitigation measures.